I often hear people asking, 'What is SAP HANA?'

My definition

SAP HANA is a database in its own right, and it only runs on SUSE Linux. It keeps the working data in RAM, while write/read controller modules persist it to a Data Volume and a Log Volume stored on disk. When you write data to SAP HANA, the change is made in RAM and recorded in the log volume on disk, which is flushed when the transaction commits so the change survives a crash; savepoints periodically write the in-memory data to the data volume. The 'disk' here means a file system on a Fusion-io SLC or MLC NAND flash card, or on a RAID 10 controller with an array of SSDs (depending on the hardware vendor). This sounds like a lot of Basis stuff, and yes, if we look at it solely from the angle of migrating the database from an RDBMS to in-memory, that is it: something new and exciting for Basis guys.
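To make the "log on disk, data in RAM, savepoint to the data volume" idea concrete, here is a minimal sketch of the generic write-ahead-logging pattern in Python. It is a toy key-value store, not SAP HANA's actual implementation: the class name, the file names `log_volume.jsonl` and `data_volume.json`, and the method names are all hypothetical. In this toy, each write is flushed to the log before the in-memory copy is updated, reads are served purely from memory, and a restart rebuilds memory from the last savepoint plus the log.

```python
import json
import os
import tempfile


class TinyInMemoryStore:
    """Hypothetical toy store: durable log + in-memory data + savepoints.

    A generic write-ahead-logging sketch, not SAP HANA's implementation.
    """

    def __init__(self, directory):
        # Hypothetical file names standing in for the log and data volumes.
        self.log_path = os.path.join(directory, "log_volume.jsonl")
        self.data_path = os.path.join(directory, "data_volume.json")
        self.memory = {}
        self._recover()

    def _recover(self):
        # Start from the last savepoint, if one exists.
        if os.path.exists(self.data_path):
            with open(self.data_path) as f:
                self.memory = json.load(f)
        # Replay the log entries written after that savepoint.
        if os.path.exists(self.log_path):
            with open(self.log_path) as f:
                for line in f:
                    entry = json.loads(line)
                    self.memory[entry["key"]] = entry["value"]

    def write(self, key, value):
        # 1. Persist the change to the log and force it to disk.
        with open(self.log_path, "a") as f:
            f.write(json.dumps({"key": key, "value": value}) + "\n")
            f.flush()
            os.fsync(f.fileno())
        # 2. Apply the change to the in-memory copy that serves reads.
        self.memory[key] = value

    def read(self, key):
        # Reads never touch disk.
        return self.memory.get(key)

    def savepoint(self):
        # Write the full in-memory state atomically, then truncate the log.
        tmp_path = self.data_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(self.memory, f)
        os.replace(tmp_path, self.data_path)
        open(self.log_path, "w").close()


def demo():
    with tempfile.TemporaryDirectory() as d:
        store = TinyInMemoryStore(d)
        store.write("customer_42", 1000)
        store.savepoint()                 # customer_42 is now in the data file
        store.write("customer_43", 250)   # customer_43 is only in the log
        restarted = TinyInMemoryStore(d)  # simulate a restart
        return restarted.read("customer_42"), restarted.read("customer_43")


print(demo())  # (1000, 250)
```

The restart recovers `customer_42` from the savepoint and `customer_43` by replaying the log, which is why the log must be on disk before a write is considered done.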

So what is in store for BW guys? OK, this is where BW consultants need to pay attention. SAP sees HANA as a game changer, with a lot of potential in the area of business analytics. So they introduced SAP HANA Studio, which is where the future equivalents of RSA1 and DB02 live. The peak of the action comes when ECC itself sits on top of HANA and HANA Business Content is made accessible directly from HANA Studio. If you are a BO guy, you already know that data provisioning is available directly from HANA: real-time data goes straight into dashboards and reports.

How about ABAPers? HANA Studio itself is built on Eclipse. So if you are an application developer, sound knowledge of Java is crucial; I can imagine that instead of developing workflows in Web Dynpro, we will do it in Java. Of course, on top of all this, there is no escaping integration with ECC, where BAdI and BAPI skill sets are always hot. So ABAPers, you will always be in demand.

To get to the ultimate HANA landscape, as with any technology's lifespan, there will be transition and obsolescence.

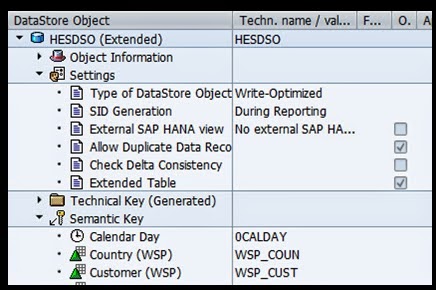

Well, let's not dwell on the word 'obsolete' here (although I do believe it contributes a lot of worry among BW consultants). Focusing on the word 'transition', that is when we start to google terms like HANA-optimized InfoCube, HANA-optimized DSO, Semantic Partition, the 'sidecar' approach, SLT, DXC and, the favourite of BPC-ABAP developers, code pushdown.

Quote from Vishal Sikka:

There is another possible reality. HANA offers an opportunity to rethink business processes. The business driving elements such as decentralisation and the explosion of interest in mobile are in play. Cloud vendors are showing us important elements of the technology with which to get the job done. Despite what detractors might say, HANA really does have disruptive potential.

Here is a list of Google search results from various sources:

WIKI

SAP HANA is an in-memory, column-oriented, relational database management system developed and marketed by SAP AG.

Note:

Column-oriented organizations are more efficient when an aggregate needs to be computed over many rows.

Column-oriented organizations are more efficient when new values of a column are supplied for all rows at once.

Row-oriented organizations are more efficient when many columns of a single row are required at the same time.

Row-oriented organizations are more efficient when writing a new row if all of the row data is supplied at the same time.
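The four trade-offs above can be illustrated with a small Python sketch that keeps the same hypothetical sales table in two layouts: a row store (one tuple per record) and a column store (one list per column). The class names, columns and data are made up for illustration, and Python lists only model the layout; real column stores also gain from contiguous memory and compression, which this sketch does not show.

```python
COLUMNS = ("region", "product", "revenue")  # hypothetical table schema


class RowStore:
    def __init__(self):
        self.rows = []  # each record kept together as one tuple

    def insert(self, record):
        # Writing a complete new row is a single append.
        self.rows.append(tuple(record[c] for c in COLUMNS))

    def get_row(self, i):
        # All columns of one record are already together.
        return dict(zip(COLUMNS, self.rows[i]))

    def sum_column(self, name):
        # Must visit every record, even though only one field is needed.
        idx = COLUMNS.index(name)
        return sum(row[idx] for row in self.rows)


class ColumnStore:
    def __init__(self):
        self.columns = {c: [] for c in COLUMNS}  # one list per column

    def insert(self, record):
        # Writing a new row touches every column list.
        for c in COLUMNS:
            self.columns[c].append(record[c])

    def get_row(self, i):
        # Must gather one value from each column list.
        return {c: self.columns[c][i] for c in COLUMNS}

    def sum_column(self, name):
        # Aggregate reads a single column; the others are never touched.
        return sum(self.columns[name])

    def replace_column(self, name, values):
        # New values for one column across all rows: swap a single list.
        if len(values) != len(self.columns[name]):
            raise ValueError("need exactly one value per row")
        self.columns[name] = list(values)


sales = [
    {"region": "EMEA", "product": "A", "revenue": 120},
    {"region": "APJ", "product": "B", "revenue": 80},
    {"region": "AMER", "product": "A", "revenue": 200},
]
row_store, col_store = RowStore(), ColumnStore()
for record in sales:
    row_store.insert(record)
    col_store.insert(record)

print(row_store.sum_column("revenue"), col_store.sum_column("revenue"))  # 400 400
print(row_store.get_row(1) == col_store.get_row(1))  # True
```

Both layouts return the same answers; what differs is how much data each operation has to touch, which is the point of the four notes above.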

SAPHANA.COM

SAP HANA is an in-memory data platform that is deployable as an on-premise appliance, or in the cloud. It is a revolutionary platform that’s best suited for performing real-time analytics, and developing and deploying real-time applications. At the core of this real-time data platform is the SAP HANA database which is fundamentally different than any other database engine in the market today.

Wikibon

SAP HANA Enterprise 1.0 is an in-memory computing appliance that combines SAP database software with pre-tuned server, storage, and networking hardware from one of several SAP hardware partners. It is designed to support real-time analytic and transactional processing.

Techopedia

SAP HANA is an application that uses in-memory database technology that allows the processing of massive amounts of real-time data in a short time. The in-memory computing engine allows HANA to process data stored in RAM as opposed to reading it from a disk. This allows the application to provide instantaneous results from customer transactions and data analyses. HANA stands for high-performance analytic appliance.

Searchsap

SAP HANA is a data warehouse appliance for processing high volumes of operational and transactional data in real-time. HANA uses in-memory analytics, an approach that queries data stored in random access memory (RAM) instead of on hard disk or flash storage.